our little chinese ups does a nice job: it's shutting down the database after 10 minutes without electricity. this means the database and its raid-array will stay in sync. but it also means half of serversniff is dead until i'm @home to restart the server. hours, usually.

still, this is way faster and less time-consuming than rebuilding the raid array and the database.

tom

Sunday, December 09, 2007

Sunday, November 11, 2007

Now Reports

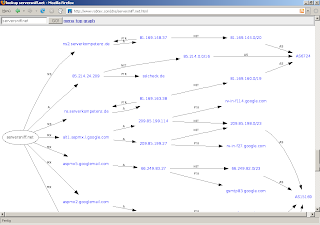

I added a new line of functions to serversniff: Reports. Currently there are NS-Reports, AS-Reports and, new today, Domainreports.

While the domain-report is still under heavy development, i feel like it's just perfect for visualizing the host- and network-structure of a domain. Try it out with stuff like http://serversniff.de/dnr-norge.no. You can easily identify external hosts or specialized routing. Try it out and have fun and insights.

tom

Sunday, October 28, 2007

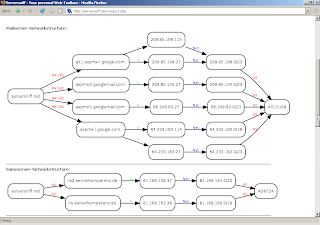

New: AS-Report

I've been rather busy the last weeks - at home, at work, everywhere - and i didn't have much time to pet serversniff. I just finished a new script: asreport. It shows you info about an AS.

You feed it with an IP, a hostname or an AS-number, and serversniff tells you:

- Uplink-ASses

- Downlink-ASses

- Known subnets on this AS

Those of you familiar with AS-geography might argue that there is no real way to determine which of the peered ASes are uplinks and which are downlinks. You are right: We are guessing. But try it out: We're usually guessing right.

Like always, this is not entirely my own work.

Serversniff has a routing-database that relies on routing-tables from de-cix and routeviews. Thanks to those guys for providing the routes. The routing data is parsed with Marco d'Itri's Zebra-Parser. Thanks Marco! The uplink/downlink-guess relies on Geoff Huston's CIDR Report. Thanks Geoff.

Please note that we do cache whois-data for the networks shown, and the routing-data might also be rather outdated, though we usually update the routing-table once a day.

I tried to fix what annoyed me on other AS-related sites:

Robtex seems to work on new, yet unlinked scripts, see http://www.robtex.com/asmacro/as-tiscalicust.html.

What's left to do is some graphs (i'm working on these) and maybe a bit of speed (it's fast enough for me, though).

If you know any other AS-analyzers or if you feel i missed something: drop me a note at thomas.springer@serversniff.net or leave a comment.

tom

Friday, September 28, 2007

finally graphs

I was always jealous of robtex.com for those crazy graphs that took me a few hours to understand completely.

I hereby confess publicly: a long time ago i wrote a wrapper just for reaping the robtex-graphic for a domain, to use it for my daily work.

and finally, serversniff comes up with those crazy graphs, too. as i'm always trying to make things better, i split rob's big picture into several smaller graphs, for they are easier to understand.

serversniff uses graphviz, like rob and many others do. seems that there is not really much else on the market for those directed graphs.

crazy syntax, lousy docs, but works like a charm.
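for the curious, this is roughly what that syntax looks like. a minimal sketch in python that only builds the DOT text for a directed graph; the function name and the hostnames are made up for illustration and are not what serversniff actually runs.

```python
def to_dot(edges, name="domain"):
    """Build Graphviz DOT source for a directed graph from (source, target, label) triples."""
    lines = [f'digraph "{name}" {{', "    rankdir=LR;"]
    for source, target, label in edges:
        # one edge statement per relation, labelled with the record type
        lines.append(f'    "{source}" -> "{target}" [label="{label}"];')
    lines.append("}")
    return "\n".join(lines)


# hypothetical example data: a domain, its nameserver and its mail exchanger
edges = [
    ("example.org", "ns1.example.net", "NS"),
    ("example.org", "mail.example.org", "MX"),
]
dot_source = to_dot(edges)
print(dot_source)
```

saved to a file, the output can be rendered with graphviz' dot tool, for example `dot -Tpng graph.dot -o graph.png`.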

tom

Friday, September 07, 2007

new styles

we're trying to revamp serversniff a little bit and we started out with a little mascot. dave decided to name it chuchu, and i'm fine with that. no more need to have jealous looks over to the snort-guys, for i always wanted serversniff to have something like their old mascot snorty. thanks, dave.

serversniff will stay as simple as it is - we're working to make it even simpler. really, a glance over to the guys at whois.sc makes me feel funny: am i really the only one feeling totally lost with five (!) menubars, different colors and even different font-families on one page?

i'll keep serversniff mostly black and white. like it or not, i still do.

tom

Thursday, August 30, 2007

donations and raisins...

for somebody asked for an address to donate: i have a paypal-account, it's thomas.springer@gmail.com.

i'd be glad for anything i can get - it will be spent on hardware, connectivity and electricity anyway.

tom

back online again

the domain-database took almost two days of downtime - and it's back online again. a few scripts like ip-info are still down, but will be online again soon.

i did not only rebuild the database, but also rewired the whole thing and drilled loads of holes throughout my house to do the network. things take time here, for it's not only the network that needs some attention, but also the kids and them.

tom

Wednesday, August 29, 2007

downtime

The domain-database is currently unavailable for i pulled the plug accidentally. I'm on my way to rebuild the db - and i used the opportunity to triple the server's ram. Maybe stuff gets faster this way.

tom

Monday, August 27, 2007

SEO with serversniff

Search-Engine-Spammers discovered that Serversniff.net is a lovely site where they can produce a page with links to their pages to be optimized. How does this work?

If you call a page like FileInfo you get an output with a link to your page, or at least with a http://somedomain/file, which google will interpret as a link to follow. The spammers do exactly this.

Serversniff uses google-ads to get at least a few bucks supporting the servercosts. Google-Bot does follow every customer, indexing the called page a few seconds later. The spammers use serverfarms or, more likely, trojanized machines to call their page 5 times in a row from different machines all over the world. Crazy.

I dropped the links on some pages, and I will put on a noindex-Header in the metatags of all relevant Serversniff-pages, hoping to stop these totally useless crapsters from abusing my machine. Seems like nothing is too crazy on the internet.

tom

Sunday, August 19, 2007

I still don't like solaris.

I recently did a pentest. Whilst torturing a webapplication i came across a file-inclusion-vulnerability, allowing me a glance at /etc/passwd via opening http://tortured-site.xx/safe.php?file=/index.php.

I tried /etc/shadow, but no luck, apache was not running as root. I poked around to see that i was on a solaris-machine. F*ck.

I remembered that years ago, when i used to work as a webmaster, they gave me a Sun E-something. A horribly fast machine, but i had no clue about solaris. After two weeks I gave up on the awful thing and got a copy of the first beta-version of Suse-Linux for Sun. I don't like Suse either, but after installing Linux on it the Sun turned from a constant source of anger and stress into a horribly fast database-machine. I decided that solaris and me will never become friends.

So, what now? I knew i had to come up with something reasonable, not just a list of accounts, to make the customer's webmasters really aware of the problem. I tried to find other logs - but no way. Solaris is not Linux, and the Apache was homegrown, installed in some funny directory i couldn't find.

Finally I came up with a promising solution: I had the accounts from /etc/passwd, and it was written there that they all used /bin/bash. I went for /home//.bash_history. No luck on the first two accounts, but then, bingo, there it was: the admin deploying new software-versions, connecting to the database with user and password on the commandline and finally grepping through the apache-logs.

The webapp suffered from another minor vulnerability: All https-transactions were done via GET-Requests. I already pointed out in my report that it's a really bad idea to transfer bank-account details, creditcard-numbers and cvcs in a URL, even if it is transferred via HTTPS.

From there on stuff was easy: I used the file-inclusion to download a day's logfile, filtered the relevant GET-requests and had lovely stuff to present:

- A list with thousands of CreditCards with CVC, Dates and Names.

- A nice record of what the various admins did over the last years. Some proved to be knowledgeable and exact, even verified md5-sums of uploaded files to ensure their integrity, some proved to be unix-illiterates like me.

- A few passwords for accounts and the locations of ssh-keyfiles i didn't bother to download.

What we can learn from this is:

- It is a nice idea to make sure your webserver can't read anywhere on your partition outside the webroot.

- It is a nice idea to keep your bash_history-file small. Putting "export HISTFILESIZE=0" in your ~/.bashrc will do the trick.

- Consider all user input as dirty.
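The first and the last point can be sketched in a few lines. This is not the fixed safe.php (which i won't show here), just a minimal python illustration of the idea: resolve the requested name and refuse anything that ends up outside the webroot. The function name and the webroot path are assumptions made up for this example.

```python
import os


def resolve_inside_webroot(webroot, requested):
    """Return the absolute path for `requested` if it stays inside `webroot`, else None."""
    root = os.path.realpath(webroot)
    # realpath collapses ".." segments and follows symlinks before we compare;
    # an absolute `requested` replaces the root in join() and is rejected below
    candidate = os.path.realpath(os.path.join(root, requested))
    if os.path.commonpath([root, candidate]) != root:
        return None
    return candidate


# hypothetical webroot, for illustration only
print(resolve_inside_webroot("/var/www/site", "index.php"))
print(resolve_inside_webroot("/var/www/site", "../../../etc/passwd"))
print(resolve_inside_webroot("/var/www/site", "/etc/passwd"))
```

A check like this only covers the application side; the webserver account should still not be able to read outside the webroot in the first place.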

The vulnerable safe.php proved to be a quick-and-dirty solution: 6 lines of custom code killing an otherwise really good webapp. Safe.php is fixed now, but hey, the site is huge, consists of many servers and I'm still keen on getting access to more user-data. Pentesting sometimes reminds me of an interactive version of the mystery-stories and whodunits like "The Three Investigators" i read when i was young. I still like this part of my job. And, for i'm using zsh instead of bash, err, can anybody tell me how to disable .zsh_history on my machines?

tom

Sunday, July 08, 2007

How nice

Usually people use serversniff. And they complain when something's completely not working. Otherwise they don't give a shit. Really.

Ok, no real matter - serversniff is a hobby and the API is there to ease my daily work. Nothing more. But really, sometimes i wish people would care more.

Roelof Temmingh does care occasionally, for he uses parts of serversniff for his evolution. He contributed valuable code and (unknowingly) many many ideas and thoughts, and he's constantly begging for new functions. Roelof, if i had the time, i'd implement far more of your requests.

I had somebody asking for an API-Password recently - and a day after i had a bugreport. I was so glad that someone cared about the bugs that i fixed them right away. When I started with serversniff i had a dream of people bringing great ideas and great scripts helping me earn big $$$. Serversniff's live for around 2 years now - about 98% of the code is still mine, and the $$$ still don't pay for hardware, electricity and servercosts. Maybe i should stop ranting here.

Have a nice week,

tom

Friday, June 22, 2007

Statistics B

We're far from complete, but getting better:

The DB knows ns/mx-records for 12.829.155 domains. More are added daily until we're complete.

The DB currently knows 1.074.097 nameservers (counted by hostname, not ip!).

tom

Statistic-Figure A: 19.155.784

I'm not really into statistics and i don't do them regularly. But i'd like to remind myself that serversniff.net currently knows more than 19.155.784 unique domains with at least one resolving host. This should be around 15 percent of all known domains on the internet.

we're still adding around 100.000 new domainnames per day, focussing on domains outside of the .com/.net-space. To get a glimpse of domains added take a look at http://tomdns.net - there you can see the newest domains added in realtime.

tom

Spam from serversniff.net

Some asshole sent out a spam-wave with random Serversniff.net sender-addresses. The little sucker put in a return-path and a sender with @serversniff.net. Since i have defined a "catch-all"-mailaccount for serversniff.net, i get all those nice complaints and returned mails. Hundreds of them! Argh.

And yes Sir, no Ma'am, neither my webserver nor my mailservers are hacked, take a look at the mailheaders:

Return-Path: <stasIsaev@serversniff.net>

Received: (qmail 25562 invoked by uid 0); 22 Jun 2007 12:17:06 +0300

Received: from 220.125.204.181 by post (envelope-from <stasIsaev@serversniff.net>, uid 92) with qmail-scanner-2.01

(clamdscan: 0.90/2659.

Clear:RC:0(220.125.204.181):.

Processed in 0.239443 secs); 22 Jun 2007 09:17:06 -0000

Received: from unknown (HELO ?220.125.204.181?) (220.125.204.181)

by post.ziniur.lt with SMTP; 22 Jun 2007 12:17:04 +0300

Received: from [220.125.204.181] (183.178.25.193)

by stasIsaev@serversniff.net with SMTP;

for <gvitkauskasd@ziniur.lt>; Fri, 22 Jun 2007 19:17:22 +0100

MIME-Version: 1.0

None of these sender-IPs belong to serversniff.net's infrastructure. Seems that it's time to drop the catch-all-address for serversniff.net.
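If you want to do this check yourself for a bounced mail, here is a minimal python sketch: it pulls the IPv4 addresses out of the Received: lines and drops the ones you know as your own. The address in `own_ips` is a placeholder from the documentation range and not serversniff's real address, and the sketch ignores folded continuation lines.

```python
import re

IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def relay_ips(header_lines):
    """Collect the distinct IPv4 addresses mentioned in Received: headers, in order of appearance."""
    seen = []
    for line in header_lines:
        if not line.startswith("Received:"):
            continue
        for ip in IPV4.findall(line):
            if ip not in seen:
                seen.append(ip)
    return seen


headers = [
    "Return-Path: <stasIsaev@serversniff.net>",
    "Received: from unknown (HELO ?220.125.204.181?) (220.125.204.181)",
    "Received: from [220.125.204.181] (183.178.25.193)",
]

# hypothetical placeholder, NOT a real serversniff address
own_ips = {"192.0.2.10"}

foreign = [ip for ip in relay_ips(headers) if ip not in own_ips]
print(foreign)
```

Everything left in `foreign` is a machine that is not yours, which is the whole point when answering angry complaints.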

tom

Monday, June 18, 2007

For the records

For the records: Our update-lag with domainnames is at 443,017 days, and it's increasing. It will continue to increase for some time, for 450 days back was a time when we did bulk-updates: inserting many many new hosts from big lists at once, without too much handling of domains and ips at all. There wasn't too much data or trigger-overhead, and the database was not yet public. I hope to bring the update-lag down to 400 days in about a month and to be around 200 days by the end of 2007. I don't really believe that we can get smaller update-cycles with our current network-bandwidth. But you always have the option to filter outdated records from being displayed, regardless of whether you use serversniff.net, tomdns.net or serversniff's api.

tom

Sunday, June 17, 2007

Serversniff deLux

If you ever wondered what serversniff looks like:

It's located in the attic above the garage, where it's hot in the summer and cold in the winter. It's made of a cheapo system with an Athlon 3-something, one gig of RAM, an old Perc2-sc-scsi-controller ripped from a Dell-server and a Proliant-HDD-array from ebay.

I suffered occasional blackouts when lightning struck, doing damage to the database - so i ordered a brandnew UPS, the small thingy standing on the right, coming straight from china. We're prepared now.

tom

Saturday, June 02, 2007

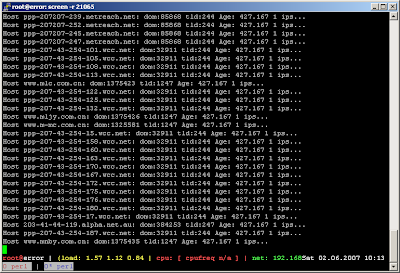

crazy ideas

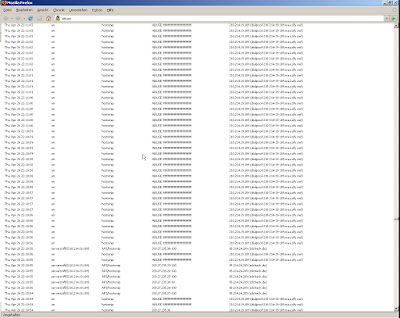

around 500 days ago i had a crazy idea: mapping the net in a database. all domains, all hostnames, all relations of ns- and mx-servers.

i knew a few sites that should have this data but would not really let you look it up - whois.sc and netcraft.com were amongst them. that was all i knew. oh and yes, i knew mysql, and i had worked with sqlite and microsoft's sql-server for years.

i expected this to be an adventure. a textadventure, fun.

and indeed, it was fun. the database crashed, servers got blocked, i had errors in my harvesting scripts and i was overwhelmed when i got around 100 million domains with even more hosts at once.

and now i'm sitting on a bunch of data that gets older. my time is limited, my hardware-resources are as well. data is getting old. i started updating the hostname/IP-entries these days. I should have done this earlier, i know - but i didn't. time is limited - remember?

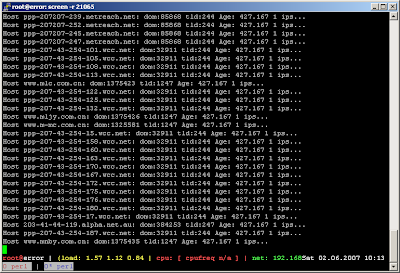

a bit frustrating: the "update-lag", the time since the last update of a hostentry, is currently exactly at 427,167 days. most frustrating: it's still increasing.

hmpf.

hmpf.

tom

Tuesday, May 29, 2007

China blocks serversniff.net

Maybe this is something we shouldn't be proud of: Chinese authorities block serversniff completely at their "great firewall of china" - even a few totally passive vhosts with a few pics and texts sitting on serversniff's ip-address get blocked by chinese censorship.

Check yourself here.

Monday, May 21, 2007

Language-Flags and a new check

Occasionally we see english users referring to the german version of Serversniff and vice versa, germans using the english version. Both versions are functionally identical, for most of our language-specific stuff is stored in a language-file. I added a language-button next to the logo - maybe this will help people get serversniff in the language that fits their needs best.

I can't really say that i had a lazy weekend, but i ended up throwing together another check: DNS-Report will do a few checks on all nameservers that are in charge of a domain. Basically this is a fraction of Scott Perry's great dnsreport.com - but with added zonetransfer-functionality. We'll add more functionality some day, doing cache-checks and stuff you won't really find on other sites.

tom

Saturday, May 19, 2007

New: Filesearch

It's a sunny sunday and i burned my back yesterday working outside building a wooden house for the kids. Time for an indoor-day and a few words.

We recently released a new script sitting in the webserver-part of serversniff's menu: FILE-SEARCH.

FileSearch is a companion to our FILE-INFO: Imagine you want to see all .DOCs on a webserver and you even want to have a look if there is any interesting stuff hidden in these files.

Have a look at this demo showing DOCs on blogspot-blogs.

This script (ab)uses major search-engines. You scriptkids might end up seeing nothing if you (ab)use it too often searching for sites hosting phpBB-security-holes.

I limited the script to 100 results. Drop me a friendly line if (and why!) you feel that this number is too low for your personal needs and i might tell you the secret switch to increase the max-result-count to google's maximum of 1.000 results.

tom.

Thursday, May 17, 2007

New AS-Lookup

Serversniff offers a new script and API-script doing lookups for the Autonomous-System of an IP or hostname.

Backend for this script is currently Team Cymru's very fine AS-Lookup - this should be fine unless we see a really huge demand for this. We used to operate a pwhois-like BGP-Parser that offered a bit more information, but the work/requestcount-ratio brought us to drop this service. Now we brought it back, using the cymru-backend.
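For those who want to query the backend themselves: a minimal python sketch, assuming Team Cymru's DNS interface, where the octets of an IPv4 address are reversed and looked up as a TXT record under origin.asn.cymru.com. Whether serversniff talks to the DNS or the whois interface is not stated here, the function names are made up, and the sample answer below is invented to show the pipe-separated layout as far as i know it. The sketch does no network access; you'd hand the name to your resolver of choice.

```python
import ipaddress


def cymru_origin_qname(ip):
    """Build the DNS name whose TXT record holds the origin AS for an IPv4 address."""
    address = ipaddress.IPv4Address(ip)  # raises ValueError on malformed input
    reversed_octets = ".".join(reversed(str(address).split(".")))
    return f"{reversed_octets}.origin.asn.cymru.com"


def parse_origin_txt(txt):
    """Split a pipe-separated TXT answer into named fields."""
    fields = [part.strip() for part in txt.split("|")]
    keys = ["asn", "prefix", "country", "registry", "allocated"]
    return dict(zip(keys, fields))


qname = cymru_origin_qname("192.0.2.1")
print(qname)

# made-up answer (documentation address space and AS number), for illustration only
record = parse_origin_txt("64496 | 192.0.2.0/24 | ZZ | example | 2007-05-17")
print(record["asn"], record["prefix"])
```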

tom

Thursday, May 10, 2007

Back to the net

The database is back, t-offline was kind enough to send a technician to fix the DSL-line. Seems that the DSL-Splitter died. I'm still thinking about a colocation in a datacenter for the database-machine.

The database is currently readonly. I took the offline-time to do some database-maintenance and found a bad block in a 17GB-sized table.

I'm working to identify the affected rows and will then decide whether to restore the old backup or restore all but the affected rows.

cheers

tom

Wednesday, May 09, 2007

Serversnoffline

Deutsche Telekom is still on strike. No technician yet, day 7 without phone, dsl and internet. Did i mention that i just hate monopolist-companies?

No real wonder, that they are the owners of t-offline.de:

Domain: t-offline.de

Nserver: support.mesch.dtag.de

Nserver: pns.dtag.de

Nserver: secondary006.dtag.net

Status: connect

Changed: 2007-03-06T22:35:34+01:00

[Holder]

Type: ORG

Name: Deutsche Telekom AG, Domainmanagement

Address: Friedrich-Ebert-Allee 140

Pcode: 53113

City: Bonn

Country: DE

Changed: 2005-06-07T10:29:07+02:00

[Admin-C]

Type: PERSON

Name: Marion Schoeberl

Address: Deutsche Telekom AG, Domainmanagement

Address: Friedrich-Ebert-Allee 140

Pcode: 53113

City: Bonn

Country: DE

Changed: 2004-08-24T10:10:06+02:00

[Tech-C]

Type: PERSON

Name: Wolfgang Linke

Organisation: T-Systems CSM GmbH

Address: Feldstr. 34

Pcode: 59872

City: Meschede

Country: DE

Phone: +49 291 90227 7575

Fax: +49 291 90227 7609

Email: domain@mesch.telekom.de

Changed: 2006-01-25T10:52:53+01:00

[Zone-C]

Type: PERSON

Name: Wolfgang Linke

Organisation: T-Systems CSM GmbH

Address: Feldstr. 34

Pcode: 59872

City: Meschede

Country: DE

Phone: +49 291 90227 7575

Fax: +49 291 90227 7609

Email: domain@mesch.telekom.de

Changed: 2006-01-25T10:52:53+01:00

Frustrated,

tom

Monday, May 07, 2007

t-offline

Parts of Serversniff.net are offline. Lightning struck friday evening.

The Domain-Database and some Utilities are hosted in my attic - a diskarray and a desktop-pc running between stored camping-equipment, Skates, old books and old electronics and cables, all connected via DSL, operated by german monopolist-telco Deutsche Telekom.

I live in a rural area with occasional thunderstorms, and lightning strikes near my house every now and then. I might have got a bad line: Whenever lightning strikes near my line, i'm offline. No Phone, no DSL, no Internet. It's dead, Jim.

No, don't even think about Telekom monitoring the line's functionality. You'll have to contact their callcenter - by phone or internet. If you're lucky, you get a nice phone-computer, and after repeating "STÖRUNG" again and again until the shitty machine understands, you will hear that all lines are busy. I had this Friday, i had this Saturday, i had this Sunday - about a hundred calls, until i finally got through sunday afternoon.

The phone-computer-odyssey continues: you have to enter your phone-number twice. Again, you have to confirm the number by yelling YESYESYES and you have to confirm the AreaCode by yelling YESYESYES and then you get a nice lady that is able to tell you, yeah, the line is dead ("lady, that's why I am calling!!"), and yeah, she will assign this to a technician, but, oh well, Deutsche Telekom is on strike. Yeah, Tuesday morning.

It'll be like it was the times before: The technician will replace a fuse in the local switch-unit, call in to say that everything's fine again, and the family will have phone and internet again.

I do pay around €100 a month for phone and internet - this is definitely not the service i expect for ~1.000 Bucks a year - but since Telekom is still the only one to provide infrastructure where i live, things will stay like this until i find a place to colocate serversniff's backend for electricity-costs only. If you can offer space (no need for a rack, just around 1 MBit connectivity) you're welcome to drop me a mail at thomas.springer@serversniff.net.

Until then you might experience a few outages during thunderstorm-season in our german summer. If you're annoyed by the outage, please keep in mind: It's a free service.

tom

Friday, April 27, 2007

abuse won't pay

Thursday, April 26, 2007

Automated use...

It took two years, but now people are starting to use serversniff.net in an automated way - despite the crazy redirect-mechanism. Some Indian guy or gal put effort into writing a script to milk serversniff.net.

I expected this much earlier. Until now we had only very rudimentary abuse-checks to prevent serversniff.net being used for a denial of service against a host or a network. Now we have tightened our abuse-checks to catch automated use of some scripts.
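The post doesn't say how the tightened abuse-checks work. As a rough illustration only, one common way to catch scripted use is a sliding-window limit per client. The class name, the limits and the use of the client IP as key below are all assumptions made for this sketch, not serversniff's actual check:

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per client within window_seconds.

    Hypothetical helper for illustration; not serversniff's real abuse-check.
    """

    def __init__(self, max_requests=10, window_seconds=60.0, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.hits = defaultdict(deque)  # client id -> timestamps of accepted requests

    def allow(self, client_id):
        now = self.clock()
        recent = self.hits[client_id]
        # Forget requests that have fallen out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_requests:
            return False  # too many requests: looks like automated use
        recent.append(now)
        return True
```

A script would call `allow(client_ip)` before doing any work and refuse the request when it returns `False`.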

We encourage automated use of serversniff's functionality, and sites like www.paterva.com do this already - but please guys, talk to us first. We can make stuff easier for you. No need to write crazy perl-scripts or ugly curl-commandlines. We won't bite, really.

tom

Sunday, March 25, 2007

Fletcher-Checksums added

Following a user-request I added fletcher-checksums (8Bit-Fletcher and 16Bit-Fletcher) to http://serversniff.de/crypt-checksum.php.

I couldn't find any ready-to-use implementation, so I recoded it in PHP. For the sake of having a Fletcher implementation in PHP online:

    <?php
    # 8 bit-fletcher
    # codes an 8bit-fletcher-hash out of a
    # hex-encoded input-string
    # consider this code public domain
    #
    $x="10111214"; # hex-encoded input-string
    $twochunks=str_split($x,2); # split string into two-character chunks (one byte each)
    $lastleft=1; $lastright=0; # init
    $modulo=65535; # fletcher-modulus
    foreach($twochunks as $char)
    {
        $lastleft=fmod(($lastleft+hexdec($char)),$modulo); # left: running sum of the bytes
        $lastright=fmod(($lastright+$lastleft),$modulo); # right: running sum of the left sums
    }
    $hexright=dechex($lastright); # make a hexval out of the old dec-val
    $hexleft=dechex($lastleft); # make a hexval out of the old dec-val
    $fletcher8="$hexright"."$hexleft"; # combine the two
    print $fletcher8;
    exit;
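For comparison, here is a minimal Python sketch of the textbook Fletcher-16 (two 8-bit running sums, both starting at 0 and reduced modulo 255). This is not a port of the PHP above, which starts its left sum at 1 and uses 65535 as modulus, so the two will generally print different values for the same input. The function names are my own choice:

```python
def fletcher16(data: bytes) -> int:
    """Textbook Fletcher-16: two running sums over the bytes, each modulo 255."""
    sum1 = 0  # running sum of the bytes
    sum2 = 0  # running sum of the sum1 values
    for byte in data:
        sum1 = (sum1 + byte) % 255
        sum2 = (sum2 + sum1) % 255
    return (sum2 << 8) | sum1


def fletcher16_hex(hex_input: str) -> str:
    """Take a hex-encoded string (like the PHP script does), return 4 hex digits."""
    return format(fletcher16(bytes.fromhex(hex_input)), "04x")


if __name__ == "__main__":
    print(fletcher16_hex("10111214"))
```

Unlike the PHP version, the result is zero-padded to four hex digits, so the two halves can always be told apart.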

Twisting and Tuning

We tweaked the ip-info-script: It's working properly with ICMP now; a bug had prevented that before. Reinhard tweaked it to add a few flags, but so far I'm not really sure whether they make any sense. We'll see from the log or comments.

We also got rid of the redirect, which seems to have prevented the page from being used with Konqueror. May there be no more bugs in there...

cheers,

tom

Friday, March 23, 2007

spinoffs, volume one or "size DOES matter"

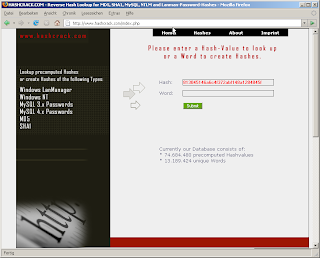

We're currently working on some small serversniff-spinoffs. Very focussed microsites with limited functionality. The first to be launched a few days ago was www.hashcrack.com, a site dedicated to reverse-lookups for several hashtypes.

We know quite a lot of hash-crackers and reverse-lookup-sites - but none of them was the thing we really wanted. Most of them have a limited number of hashes - the biggest we found had more than 200.000.000 words. There are a few bigger ones supporting crackers like John the Ripper or rainbow-tables.

But almost all are limited to MD5-lookups. Hey guys, it's 2007 and we do IT-security. Occasionally I need to reverse other unsalted hashes: MySQL, SHA1 or plain old Windows, be it NTLM or LanManager. Computing power and harddrives are cheap - so we're working on the ultimate site for database-driven hash-lookups, supporting

- MD5

- SHA1

- LanManager

- NTLM

- MySQL 3

- MySQL 4

Hashcrack.com currently lists 11.000.000 words with ~65.000.000 hashes on a (nearly) static database, because we needed some data to experiment with. When we're finished creating all those hashes we'll simply upgrade hashcrack.com to far more than 1.000.000.000 known hashes, hoping that it'll be of some use.
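The idea behind a database-driven reverse lookup can be sketched in a few lines of Python: hash every word once with each unsalted algorithm and index the words by digest. This is only an illustration with an in-memory dict, not hashcrack.com's code. It covers MD5, SHA1 and the MySQL 4.1-style hash (a "*" followed by the uppercase hex of SHA1 applied twice) and leaves out LanManager, NTLM and the old MySQL 3 hash. All function names are made up for the sketch:

```python
import hashlib


def md5_hex(word: str) -> str:
    return hashlib.md5(word.encode("utf-8")).hexdigest()


def sha1_hex(word: str) -> str:
    return hashlib.sha1(word.encode("utf-8")).hexdigest()


def mysql41_hex(word: str) -> str:
    # MySQL 4.1+ PASSWORD(): "*" + uppercase hex of SHA1(SHA1(password)),
    # where the inner SHA1 is taken as raw bytes.
    inner = hashlib.sha1(word.encode("utf-8")).digest()
    return "*" + hashlib.sha1(inner).hexdigest().upper()


HASHERS = {"md5": md5_hex, "sha1": sha1_hex, "mysql41": mysql41_hex}


def build_lookup(words):
    """Map every digest (lowercased) to the (algorithm, word) that produced it."""
    table = {}
    for word in words:
        for name, hasher in HASHERS.items():
            table[hasher(word).lower()] = (name, word)
    return table


def reverse_lookup(table, digest: str):
    """Return (algorithm, word) for a known digest, or None."""
    return table.get(digest.strip().lower())
```

With a real wordlist the dict would be replaced by an indexed database table, but the lookup stays a single key search per hash.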

We welcome any opinions, comments and listings of your favourite reverse-lookup-sites.

tom

Wednesday, March 21, 2007

encryption is online again

our encrypter/decrypter is online again. while reinhard is workin hard to learn cryptography for his ceh-exam he fixed the script and put it online again. thanks reinhard!

reinhard also promised to work on new dns-scripts, too! go boy, go!

and, how nice: spammers read our blog - there were no new requests asking to sell our domainbase to obscure parties during the last week.

cheers,

tom

Friday, March 02, 2007

domainnames for sale....

we got quite a few requests to sell our domain-database during the last weeks. we continue to refuse almost all of them.

please: don't bother to ask unless you agree to serversniff's terms of use.

don't bother to ask unless you can prove in some way that you are willing to abide by these terms.

like anyone else having an email address we get more than enough email-spam. we're not really interested in domainname-business and SEO (which would better be spelled SESpam) and we do not consider this business to create any added value for internet-security or the internet itself.

maybe i won't make the world any better, but i'll keep tryin not to make it any worse than it already is.

cheers,

tom

Wednesday, February 21, 2007

Back to "normal" operation

We're back to normal operation, all tools should work fine now. Still missing are the "Crypt-Decode", Crypt-Encrypt/Decrypt and the Virus-Check.

Crypt-Decode will come back in a few days. Crypt-Encrypt/Decrypt too. The Virus-Check will be integrated into the new File-Info-Tool we're working on.

We're currently developing new tools and improving old ones.

The crypto- and encoding-Scripts already support a few new Hash/CRC/Encoding-Algorithms, more will follow.

The SSL-Check will soon support even more ciphers, making it the best SSL-Check we know, checking more ciphers and functionality than any other SSL-Check, be it offline or online.

We're currently working on a sort of "decompiler" for unpacking and decompiling a bunch of different formats ranging from Macromedia Flash (.swf) and Microsoft's Winword (including macros and plaintext) to full-blown Java applets.

We're considering a bunch of other applications to make the life of security-guys easier - but we'd love to have your input: can you think of a (not yet available) tool to ease your work? - Drop us a mail or write a comment - we're open to anything.

tom

Monday, February 19, 2007

serversnoffline

Serversniff was down, and is up again. With a reduced toolset, still. I kicked the old installation in a fit of rage when a bunch of tools failed to compile due to Suse's crazy path-structure.

I always hated Suse, and for years \me has refused to work with any non-debian-system whenever possible. We had to choose Suse because Serversniff's hoster Strato didn't offer anything else a year ago.

Things have changed: Strato now offers other distributions. We set up a new system on Debian sarge, updated from sid to be more up to date. We did a restore from backup, which worked quite well, despite some upgrade-hassle with MySQL (4->5) and some changed network-functionality and text-output from commandline-tools like ping.

We're still missing the SSL- and the Crypto-Functions, and many API-Functions still fail or behave a bit strangely - we will restore these during the next few days. They had to be revamped anyway, so expect more API-functions and public stuff to run a bit smoother when it's all restored. We apologize for any inconvenience.

tom

Thursday, February 15, 2007

Showing hidden Meta-Information in DOC, PDF and more than 100 other file-formats

Did you hear about hidden information in formats like Microsofts .doc?

We did. Yeah. You too. For most of us this is old news. Read here or here, or ask your favourite big-brother search-engine.

Everybody should know this - but people everywhere, from governments to No Such Agencies, keep publishing Winword-documents on their websites.

During our penetration tests (and during our internal FileInfo-tests) we came across quite a lot of websites with chatty files, especially .doc. We were fed up with explaining this again and again and created a nifty little tool to analyze as many file-formats as possible. If you want to give it a beta-try, drop by Serversniff's "FileInfo". Currently this ONLY handles files on webservers, which means the file to be checked has to be on some public webserver. Beware: The check is more than slow and only supports files smaller than 1 MB. It also fails on filenames with blanks or %20. It's BETA. Stuff will get better with our next serverupgrade, which will finally kick SuSe-Linux into /dev/null.

Examples in Winword, containing a bit of hidden information (and no, we won't post any files with hidden text here!)

It's not only Winword that is chatty - we also found loads of PDF-files on websites containing the Windows-usernames of the people who created them. This might get dangerous when you are able to determine the user-structure and naming-convention of an organisation. While many PDFs are clean, there seem to be a few PDF-creator-tools that leak this information by default.

Especially Acrobat Distiller puts realnames or Windows-usernames into the PDF's Meta-Information (examples: http://www.verfassungsschutz.de/download/SHOW/symp_2006_abstract_pet.pdf or http://www.nsa.gov/publications/publi00010.pdf, both showing usernames in the "Author" and "Creator" fields).

This seems to be configurable: Google did a better job, see http://www.google.com/ads/techb2b_news.pdf, while Yahoo puts usernames in many files, like this here http://publisher.yahoo.com/rss/RSS_whitePaper1004.pdf.
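To see what such a leak looks like, here is a minimal Python sketch that pulls a few Info-dictionary fields out of the raw bytes of a PDF. It is not how FileInfo works, just an illustration. It assumes the Info dictionary is stored uncompressed with plain literal strings, and it ignores hex strings, UTF-16 values, nested parentheses and octal escapes. The function name is my own:

```python
import re

# Matches e.g. "/Author (jdoe)". Backslash-escaped characters inside the
# parentheses are allowed, unescaped nested parentheses are not.
INFO_FIELD = re.compile(rb"/(Author|Creator|Producer|Title)\s*\(((?:\\.|[^\\)])*)\)")


def pdf_info_fields(raw: bytes) -> dict:
    """Return the first value found for each of the matched Info fields."""
    fields = {}
    for key, value in INFO_FIELD.findall(raw):
        # Simplified unescaping: drop the backslash, keep the following character.
        text = re.sub(rb"\\(.)", rb"\1", value, flags=re.S)
        fields.setdefault(key.decode("ascii"), text.decode("latin-1"))
    return fields


if __name__ == "__main__":
    import sys

    with open(sys.argv[1], "rb") as handle:
        for name, content in pdf_info_fields(handle.read()).items():
            print(f"{name}: {content}")
```

Run it against a downloaded PDF and look at "Author" and "Creator". If a username shows up there, the file is as chatty as the examples above.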

Feel free to experiment. FileInfo will display internal Meta-Information for more than 100 File-Formats.

Please drop us a mail if you stumble over something funny or if you just like the tool - we'll do our best to fix stuff or add more file-formats and functionality, and we're waiting for any user-input.

tom

Wednesday, February 14, 2007

The Big Big AXFR

I like the global AXFRs posted in one of the comments to my previous posting. Have a look at Maximilian Dornseif's well-hidden blog entry at http://blogs.23.nu/disLEXia/stories/10092/ to get an idea of how to automate this. Maximilian missed that there are a hell of a lot of secondary TLDs like co.uk, ac.uk etc.
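Why secondary TLDs matter shows up as soon as you sort harvested hostnames into domains: taking the last two labels works for .com, but turns everything under co.uk into "co.uk". A minimal Python sketch, where the suffix set is a tiny hand-picked example and the function name is made up for illustration:

```python
# Illustrative only: a real list of secondary TLDs is far longer.
SECOND_LEVEL_SUFFIXES = {"co.uk", "ac.uk", "org.uk"}


def registrable_domain(hostname, suffixes=SECOND_LEVEL_SUFFIXES):
    """Return the domain a hostname belongs to, or None if there is none."""
    labels = hostname.lower().rstrip(".").split(".")
    if ".".join(labels) in suffixes:
        return None  # the name is itself a secondary TLD
    if len(labels) >= 3 and ".".join(labels[-2:]) in suffixes:
        return ".".join(labels[-3:])  # e.g. example.co.uk
    if len(labels) >= 2:
        return ".".join(labels[-2:])  # e.g. example.com
    return None  # a bare label like "localhost"
```

The same suffix list also tells you which extra zones are worth asking for in the first place.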

He also missed that there are a few really big hosters and providers who return far more entries than many TLDs.

But all in all this blogentry was one of the reasons to create tomdns.net.

Thanks, Maximilian.

tom

Tuesday, February 13, 2007

Where do i get domains....

Dear kids,

I understand completely that you ask me where to download a list of 100.000.000 domains.

I did, and this drove a few GB traffic to a website. Can you imagine what would happen if i'd post any such url here? Boys, i guess you can. So please, have a look at http://johnny.ihackstuff.com/ and try to have fun with our favourite search-engine as well.

Another option: Since most of the URLs listed on the site are .com/.net, you might also get them directly from Verisign. The ToS there are roughly the same as the ToS at www.serversniff.net; in fact I derived serversniff's Terms of Service from Verisign's, because the last thing I want to do is support f*cking spammers.

Or: Create something on your own. Something to offer, to me or to the "community". Then come back to me and ask. I'd be happy to support you with a list of domains if you're able to explain what you're working on. I'd be happy to team up with you if there is any win-win-situation.

If you're working for a company: I'd be happy to support you with hostnames, domainnames or known IPs, filtered by whatever you want, given that you're willing to agree to our ToS. Drop me a mail and I'm quite sure we'll negotiate a reasonable agreement.

Tom

Monday, February 05, 2007

before the flood....

We are in the process of updating our domain-database: we just started an insert of roughly 150.000.000 hostnames, bringing our db-system to the limit.

We ceased "regular" spidering and updates for a while to catch up with this bulk-data. Currently we run at a rate of about 1.000.000 new domains per day, which we consider not really bad, but still unsatisfying: at that rate the whole insert would take roughly 150 days. We are currently testing a NAS-Array running on 6 SCSI-Disks (as an experiment - we will invest in more hardware if this proves to be faster than the current system).

thomas
